SOLR

Security Considerations

As with the other services on the platform, access to Solr is authenticated, and your platform authentication is used automatically. Access to Solr indexes is also restricted, for example to guarantee the privacy of sensitive data or to prevent accidental modification of another user's data.

You are granted unlimited access (creation, modification, query, deletion) to all collections whose names are prefixed by your user id followed by an '_' character. No one else can access these collections. A collection can be shared by using a group name instead of a user id as the collection name prefix. All users in the group then receive full access to the data, including modification and deletion.

To create or modify a group, or to obtain finer-grained access (such as a read-only share), please contact the platform technical officer.
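The naming rule above can be illustrated with a small client-side helper. This is a sketch only: the class CollectionNames and its methods are hypothetical (they are not part of SolrJ or spark-solr) and merely mirror the prefix convention, while the actual access control is enforced by the platform.

```java
import java.util.Set;

/** Hypothetical helper mirroring the "<owner>_<name>" collection naming rule. */
final class CollectionNames {
    private CollectionNames() {}

    /** Builds a collection name owned by a user id or by a group name. */
    static String of(String owner, String name) {
        if (owner == null || owner.isEmpty() || name == null || name.isEmpty()) {
            throw new IllegalArgumentException("owner and name must be non-empty");
        }
        return owner + "_" + name;
    }

    /**
     * Client-side check: true if the collection is prefixed by "<userId>_" or by
     * "<group>_" for one of the user's groups. Solr itself enforces the real rule.
     */
    static boolean isAccessible(String collection, String userId, Set<String> groups) {
        if (collection.startsWith(userId + "_")) {
            return true;
        }
        for (String group : groups) {
            if (collection.startsWith(group + "_")) {
                return true;
            }
        }
        return false;
    }
}
```

For example, CollectionNames.of("flalanne", "gowalla") yields flalanne_gowalla, the collection name used in the start script further down this page.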

Using Solr with Spark

SolrJ is the Java API for communicating with Solr. It can be used with Spark through Lucidworks' spark-solr library. All the code used in this Solr indexing example is available on gitub.u-bordeaux.fr

Dependencies Management

Maven

Since Apache Maven is used as the build tool for the project, spark-solr must be added as a project dependency. The dependency will be shipped during the launch process, so it is declared with the 'provided' scope.

<dependency>
    <groupId>com.lucidworks.spark</groupId>
    <artifactId>spark-solr</artifactId>
    <version>3.5.6</version>
    <scope>provided</scope>
</dependency>

Spark must also be added as a project dependency, again with the 'provided' scope.

<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>2.3.1.3.0.0.0-1634</version>
    <scope>provided</scope>
</dependency>

Creating a Collection

If the collection in which the data is to be indexed does not exist yet, it must be created. Creating a collection requires the name of the collection, the number of shards the data will be split into, the number of times each shard will be replicated, and the maximum number of shards a single node can host.

Although Solr may be able to guess the type of the fields from the indexed data, you may need to set up the index schema before indexing any data. The following method creates a collection using the properties mentioned above, together with a collection of fields (each represented as a Map<String, Object>) to add to the collection schema.

import java.io.IOException;
import java.util.Collection;
import java.util.Map;
import java.util.stream.Collectors;

import org.apache.solr.client.solrj.SolrServerException;
import org.apache.solr.client.solrj.impl.CloudSolrClient;
import org.apache.solr.client.solrj.request.CollectionAdminRequest;
import org.apache.solr.client.solrj.request.schema.SchemaRequest;
import org.apache.solr.client.solrj.response.RequestStatusState;
import org.apache.solr.client.solrj.response.schema.SchemaResponse;
import org.apache.solr.common.params.ModifiableSolrParams;

private static void createCollection(String collection,
                                     int numShards,
                                     int numReplicas,
                                     int maxShardsPerNode,
                                     Collection<Map<String, Object>> fields,
                                     CloudSolrClient client) throws SolrServerException, IOException, InterruptedException {
    //create a collection creation request
    CollectionAdminRequest.Create req = CollectionAdminRequest.createCollection(collection, numShards, numReplicas);
    req.setMaxShardsPerNode(maxShardsPerNode);
    //send the request through the given client and wait (at most 180 seconds) for it to complete
    RequestStatusState response = req.processAndWait(client, 180);
    //check whether the collection was successfully created
    if (response != RequestStatusState.COMPLETED) {
        throw new SolrServerException("failed to create collection " + collection + " (status: " + response + ")");
    }
    //get the fields currently in the schema (some may already exist, e.g. those inherited from the default configset)
    SchemaRequest.Fields fieldsReq = new SchemaRequest.Fields(new ModifiableSolrParams());
    Map<String, Map<String, Object>> fieldsResponse = fieldsReq.process(client, collection)
            .getFields()
            .stream()
            .collect(Collectors.toMap(m -> (String) m.get("name"), m -> m));
    //update the collection's schema using the provided field properties
    for (Map<String, Object> field : fields) {
        SchemaResponse.UpdateResponse updateResponse;
        if (!fieldsResponse.containsKey((String) field.get("name"))) {
            //the field does not exist yet: use an AddField request to add it with the given properties
            SchemaRequest.AddField fieldReq = new SchemaRequest.AddField(field, new ModifiableSolrParams());
            updateResponse = fieldReq.process(client, collection);
        } else {
            //the field already exists: use a ReplaceField request to replace its current properties
            SchemaRequest.ReplaceField fieldReq = new SchemaRequest.ReplaceField(field, new ModifiableSolrParams());
            updateResponse = fieldReq.process(client, collection);
        }
        if (updateResponse.getStatus() != 0) {
            throw new SolrServerException("failed to add or replace field " + field.get("name"));
        }
    }
}

The following method shows an example of field properties. For each field, the name and type properties are required. The type property sets the type of the field by referring to a fieldType of the collection schema. Every newly created collection inherits, in its basic schema, several fieldTypes for the data types included in Solr. For the type name to use for each of those data types, refer to the fieldTypes defined in the default schema. More properties may need to be set on the fields depending on the use case.

private static Collection<Map<String, Object>> gowallaFields() {
    List<Map<String, Object>> fields = new ArrayList<>();
    //user id field of type string (StrField)
    Map<String, Object> user = new HashMap<>();
    user.put("name", "user");
    user.put("type", "string");
    fields.add(user);
    //loc field of type location (LatLonPointSpatialField)
    Map<String, Object> loc = new HashMap<>();
    loc.put("name", "loc");
    loc.put("type", "location");
    fields.add(loc);
    //date field of type pdate (DatePointField)
    Map<String, Object> date = new HashMap<>();
    date.put("name", "date");
    date.put("type", "pdate");
    fields.add(date);
    return fields;
}
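Optional attributes such as indexed, stored, docValues or multiValued (listed in the "Valid attributes for fields" comment of the default schema) can be added to the same maps. The following sketch shows a hypothetical FieldDefs helper that builds such a map while guaranteeing that name and type are always set; it assumes the attribute names are passed to the Schema API unchanged, as createCollection does with the maps returned by gowallaFields().

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper building the field maps passed to createCollection. */
final class FieldDefs {
    private FieldDefs() {}

    /**
     * Builds a field definition. name and type are mandatory; extra may hold optional
     * schema attributes such as "indexed", "stored", "docValues" or "multiValued".
     */
    static Map<String, Object> field(String name, String type, Map<String, Object> extra) {
        if (name == null || name.isEmpty() || type == null || type.isEmpty()) {
            throw new IllegalArgumentException("name and type are required");
        }
        Map<String, Object> def = new LinkedHashMap<>();
        if (extra != null) {
            def.putAll(extra);
        }
        // set name and type last so that entries in extra cannot override them
        def.put("name", name);
        def.put("type", type);
        return def;
    }
}
```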

The data of the created collection is divided into numShards shards spread across the Solr cluster, and each shard is replicated numReplicas times.
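Since maxShardsPerNode caps how many replicas of the collection a single node may host, a collection made of numShards × numReplicas replicas needs at least ceil(numShards × numReplicas / maxShardsPerNode) live nodes; otherwise the creation request should be rejected. A minimal sketch of this arithmetic, using a hypothetical ShardMath class:

```java
/** Hypothetical helper: minimum number of live nodes needed to create a collection. */
final class ShardMath {
    private ShardMath() {}

    /** Total number of shard replicas (cores) of the collection. */
    static int totalReplicas(int numShards, int numReplicas) {
        return Math.multiplyExact(numShards, numReplicas);
    }

    /** ceil(numShards * numReplicas / maxShardsPerNode), for strictly positive arguments. */
    static int minNodes(int numShards, int numReplicas, int maxShardsPerNode) {
        if (numShards < 1 || numReplicas < 1 || maxShardsPerNode < 1) {
            throw new IllegalArgumentException("all arguments must be >= 1");
        }
        int total = totalReplicas(numShards, numReplicas);
        // integer ceiling division for positive values
        return (total + maxShardsPerNode - 1) / maxShardsPerNode;
    }
}
```

Assuming the three numbers after --create in the start script are numShards, numReplicas and maxShardsPerNode, --create 6 2 2 yields 12 replicas and therefore requires at least 6 nodes.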

Deleting a collection

The following method shows how to delete a collection. The data indexed in that collection is lost and will have to be reindexed before it can be used with Solr again.

private static void deleteCollection(String collection, CloudSolrClient client) throws SolrServerException, IOException {
    //create a collection deletion request
    CollectionAdminRequest.Delete delete = CollectionAdminRequest.deleteCollection(collection);
    //send the request through the given client
    CollectionAdminResponse response = delete.process(client);
    //check whether the collection was successfully deleted
    if (!response.isSuccess()) {
        throw new SolrServerException("failed to delete existing collection");
    }
}

Check the presence of a collection

The following method checks whether a collection already exists in Solr.

private static boolean exists(String collection, CloudSolrClient client) throws IOException, SolrServerException {
    //retrieve the list of collections
    List<String> collections = CollectionAdminRequest.listCollections(client);
    //check whether any of the collection names matches the given name
    return collections.contains(collection);
}

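Indexing documents in Solr

The following method indexes an RDD of documents into a collection with spark-solr's SolrSupport.indexDocs (batchSize controls how many documents are sent per update request), then commits the collection so that the new documents become visible to searches.

private static void indexToSolr(JavaRDD<SolrInputDocument> documentJavaRDD, String collection, int batchSize, String zkHost, CloudSolrClient client) throws SolrServerException, IOException {
    //call spark-solr's indexDocs method to index the given documents into the collection
    SolrSupport.indexDocs(zkHost, collection, batchSize, documentJavaRDD.rdd());
    //commit the indexed documents (waitFlush = true, waitSearcher = true)
    client.commit(collection, true, true);
}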
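The batchSize argument bounds the number of documents sent to Solr in each update request. The sketch below illustrates the idea in plain Java with a hypothetical Batching helper that splits a list into consecutive batches; it is not spark-solr's implementation, which buffers documents within each Spark partition and may differ in its details.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: split items into consecutive batches of at most batchSize elements. */
final class Batching {
    private Batching() {}

    static <T> List<List<T>> batches(List<T> items, int batchSize) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        List<List<T>> result = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            // copy the sub-list so each batch is independent of the source list
            result.add(new ArrayList<>(items.subList(start, end)));
        }
        return result;
    }
}
```

Under that per-partition assumption, with --batchSize 10000 a partition holding 25,000 documents would be sent in three requests of 10,000, 10,000 and 5,000 documents.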

Preparing data for indexing in Solr

The following method gives an example of how to create documents to index into Solr from text files. The text data comes from the Gowalla check-ins dataset.

private static JavaRDD<SolrInputDocument> parseGowalla(String input) {
    //read the text data from the input path given as parameter
    JavaRDD<String> textData = JavaSparkContext.fromSparkContext(SparkContext.getOrCreate()).textFile(input);
    //associate each line with an index that will be used as the Solr document id
    JavaPairRDD<String, Long> textDataWithId = textData.zipWithIndex();
    //flatMap creates 0 or 1 document per line, depending on whether
    //the line could be parsed into a valid document
    JavaRDD<SolrInputDocument> documents = textDataWithId.flatMap(line -> {
        //split the line (line._1) into fields using the tab separator
        String[] fields = line._1.split("\t");
        //skip lines lacking user id, date, latitude or longitude
        if (fields.length < 4) {
            return Collections.emptyIterator();
        }
        try {
            //parse the latitude and longitude fields
            double lat = Double.parseDouble(fields[2]);
            double lon = Double.parseDouble(fields[3]);
            //if the parsed values are valid coordinates
            if (!(lat < -90 || lat > 90) && !(lon < -180 || lon > 180)) {
                //parse the user id field and get the date field
                long user = Long.parseLong(fields[0]);
                String dateString = fields[1];
                //create a new document and add the user, location ("lat,lon"), date and id fields
                SolrInputDocument doc = new SolrInputDocument();
                doc.addField("user", user);
                doc.addField("loc", lat + "," + lon);
                doc.addField("date", dateString);
                //the document id is the line index (line._2)
                doc.addField("id", line._2.toString());
                //return an iterator over a list containing this single document
                return Collections.singletonList(doc).iterator();
            }
            //if the values are not valid, return an empty iterator
            return Collections.emptyIterator();
        } catch (NumberFormatException e) {
            //if a numeric field could not be parsed, return an empty iterator
            return Collections.emptyIterator();
        }
    }).repartition(800);
    return documents;
}
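The per-line logic of the flatMap above can be unit-tested without Spark or Solr by moving it into a plain class. The following GowallaLine class is a hypothetical sketch assuming the tab-separated layout used by parseGowalla (user id, check-in time, latitude, longitude, then further columns). Unlike the original method, it also rejects NaN coordinates and validates the check-in time with java.time.Instant.parse, since a pdate field expects the 1995-12-31T23:59:59Z form described in the default schema.

```java
import java.time.Instant;
import java.time.format.DateTimeParseException;
import java.util.Optional;

/** Hypothetical, Spark-free version of the per-line parsing done in parseGowalla. */
final class GowallaLine {
    final long user;
    final String date;  // ISO-8601 instant, e.g. 2010-10-19T23:55:27Z
    final double lat;
    final double lon;

    private GowallaLine(long user, String date, double lat, double lon) {
        this.user = user;
        this.date = date;
        this.lat = lat;
        this.lon = lon;
    }

    /** Value for a Solr location field: "lat,lon". */
    String loc() {
        return lat + "," + lon;
    }

    /** Returns empty if the line is malformed or the coordinates are out of range. */
    static Optional<GowallaLine> parse(String line) {
        String[] f = line.split("\t");
        if (f.length < 4) {
            return Optional.empty();
        }
        try {
            double lat = Double.parseDouble(f[2]);
            double lon = Double.parseDouble(f[3]);
            // written as "not within range" so that NaN values are rejected too
            if (!(lat >= -90 && lat <= 90) || !(lon >= -180 && lon <= 180)) {
                return Optional.empty();
            }
            long user = Long.parseLong(f[0]);
            Instant.parse(f[1]);  // throws DateTimeParseException if not an ISO-8601 instant
            return Optional.of(new GowallaLine(user, f[1], lat, lon));
        } catch (NumberFormatException | DateTimeParseException e) {
            return Optional.empty();
        }
    }
}
```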

Reading a collection

The following method returns an RDD corresponding to the whole collection.

private SolrJavaRDD read(String collection, SparkContext sc) throws IOException, SolrServerException {
    //the default way of getting a Solr RDD, SolrJavaRDD.get(zkHost, collection, sc), fails with a NullPointerException
    //caused by null SolrParams in the uniqueKey request it sends to get the collection's unique key field;
    //we therefore send this request manually (with empty params)
    //and pass the unique key as a parameter to the SelectSolrRDD constructor.
    //client (CloudSolrClient) and zkHost (String) are assumed to be fields of the enclosing indexer object
    SchemaRequest.UniqueKey uniqueKey = new SchemaRequest.UniqueKey(new ModifiableSolrParams());
    Option<String> uKey = Option.apply(uniqueKey.process(client, collection).getUniqueKey());
    //the following parameters keep their default values
    Option<String> requestHandler = Option.empty();
    Option<String> query = Option.apply(QueryConstants.DEFAULT_QUERY());
    Option<String[]> fields = Option.empty();
    Option<Object> rows = Option.apply(QueryConstants.DEFAULT_PAGE_SIZE());
    Option<String> splitField = Option.empty();
    Option<Object> splitsPerShard = Option.empty();
    Option<SolrQuery> solrQuery = Option.empty();
    Option<Object> maxRows = Option.empty();
    Option<SparkSolrAccumulator> accumulator = Option.empty();
    //create the SelectSolrRDD corresponding to the collection with the obtained uniqueKey
    SolrJavaRDD solrRDD = new SolrJavaRDD(new SelectSolrRDD(zkHost, collection, sc,
            requestHandler,
            query,
            fields,
            rows,
            splitField,
            splitsPerShard,
            solrQuery,
            uKey,
            maxRows,
            accumulator));
    return solrRDD;
}

You can use the returned object like any other RDD. You can also use it to send a query to the collection. The following code snippet illustrates both uses.

//get an RDD corresponding to the whole collection
SolrJavaRDD indexedDocs = indexer.read(collection, sc);
//SolrJavaRDD extends JavaRDD<SolrDocument>,
//so the usual Spark operations can be used on a SolrJavaRDD instance,
//such as counting
System.out.println("Indexed " + indexedDocs.count() + " documents");
//or map transformations
System.out.println(indexedDocs.map(doc -> ((String) doc.getFieldValue("user")))
        .distinct()
        .count() + " distinct users in collection");
//Bordeaux: 44°50'16" N, 0°34'46" W (longitudes west of Greenwich are negative)
double latBordeaux = 44 + (50 + 16 / 60.0) / 60.0;
double lonBordeaux = -(34 + 46 / 60.0) / 60.0;
//radius in meters
double radius = 100000;
//example of filtering the data by distance from a specific point on Earth;
//greatCircleDistance is a helper (not shown here) returning a distance in meters
System.out.println(indexedDocs.filter(doc -> {
    double[] latLon = Arrays.stream(((String) doc.getFieldValue("loc")).split(","))
            .mapToDouble(Double::parseDouble).toArray();
    return greatCircleDistance(latBordeaux, lonBordeaux, latLon[0], latLon[1]) < radius;
}).count() + " checkins from within " + (radius / 1000) + "km of Bordeaux");
//the same can be done with a Solr query:
//the whole dataset then does not need to be extracted from the index,
//and Solr's capabilities are used for more efficient filtering (geofilt's d parameter is in km)
String query = "&q=*:*&fq={!geofilt sfield=loc}&pt=" + latBordeaux
        + "," + lonBordeaux + "&d=" + (radius / 1000);
JavaRDD<SolrDocument> queryResult = indexedDocs.query(query).cache();
System.out.println(queryResult.count() + " checkins from within " + (radius / 1000) + "km of Bordeaux");
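The filter above calls a greatCircleDistance(lat1, lon1, lat2, lon2) helper that is not shown on this page. Because radius is expressed in meters (100000, reported as 100 km), the helper must return meters. A minimal sketch using the haversine formula, assuming a spherical Earth with a mean radius of 6,371 km; the class name GeoDistance is hypothetical, and its results may differ slightly from those of Solr's geofilt near the boundary of the circle.

```java
/** Sketch of the greatCircleDistance helper (haversine formula, spherical Earth). */
final class GeoDistance {
    /** Mean Earth radius in meters (assumption: spherical model). */
    static final double EARTH_RADIUS_M = 6_371_000.0;

    private GeoDistance() {}

    /** Great-circle distance in meters between two points given in decimal degrees. */
    static double greatCircleDistance(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double dPhi = Math.toRadians(lat2 - lat1);
        double dLambda = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                + Math.cos(phi1) * Math.cos(phi2) * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        // clamp to 1 to guard against rounding errors before asin
        double c = 2 * Math.asin(Math.sqrt(Math.min(1.0, a)));
        return EARTH_RADIUS_M * c;
    }
}
```

Calling it as GeoDistance.greatCircleDistance(...) in the filter, or through a static import once the class is placed in a package, lets the snippet above compile; a static method call inside a Spark lambda does not add anything to the serialized closure.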

Client creation and Zookeeper connection string

Most operations that interact with Solr need either a CloudSolrClient or a ZooKeeper connection string (named zkHost in the parameters of the methods above). The former can be created from the latter using

SolrSupport.getCachedCloudClient(zkHost);

The ZooKeeper connection string can be obtained from the platform configuration by sourcing /etc/solr/conf/solr.in.sh and using the ZK_HOST environment variable, as illustrated in the following start script.
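A ZooKeeper connection string is a comma-separated list of host:port pairs, optionally followed by a chroot path (for example zk1:2181,zk2:2181/solr; these host names are placeholders, the platform's actual value is the one defined in solr.in.sh). When an API expects the host list and the chroot separately, the string can be split as in the following sketch; the ZkHostString class is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

/** Hypothetical helper splitting a ZooKeeper connection string into hosts and chroot. */
final class ZkHostString {
    final List<String> hosts;
    final Optional<String> chroot;

    private ZkHostString(List<String> hosts, Optional<String> chroot) {
        this.hosts = hosts;
        this.chroot = chroot;
    }

    /** Parses "h1:2181,h2:2181/solr" into [h1:2181, h2:2181] and "/solr". */
    static ZkHostString parse(String zkHost) {
        if (zkHost == null || zkHost.trim().isEmpty()) {
            throw new IllegalArgumentException("empty ZooKeeper connection string");
        }
        String s = zkHost.trim();
        // the chroot, if any, starts at the first '/'
        int slash = s.indexOf('/');
        Optional<String> chroot = slash >= 0 ? Optional.of(s.substring(slash)) : Optional.empty();
        String hostPart = slash >= 0 ? s.substring(0, slash) : s;
        return new ZkHostString(Arrays.asList(hostPart.split(",")), chroot);
    }
}
```

In cluster deploy mode the Spark driver runs on a cluster node rather than on the gateway, which is why the start script passes the value explicitly with --zkHost instead of relying on the driver's environment.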

Start process

As mentioned previously, using spark-solr on the platform requires shipping the library's code along with the project code, as well as a ZooKeeper connection string to connect to Solr. In addition, your application's connections to Solr must be authenticated.

To that end, further configuration and additional dependencies must be collected and passed to Spark. The appropriate configuration and dependencies (including spark-solr) are available in the Solr client configuration directory /etc/solr/conf/ on the platform gateway. The shell script /etc/solr/conf/solr.in.sh defines 3 variables:

- ZK_HOST: the ZooKeeper connection string used to connect to Solr

- SPARK_ADDITIONAL_JARS: additional Java dependencies to pass to spark-submit with the --jars option

- SPARK_PROPERTY_FILE: a Spark configuration file containing additional properties specific to the use of Solr on the platform

The following script illustrates how to use this configuration to start indexing data into Solr.

source /etc/solr/conf/solr.in.sh

spark-submit \
  --deploy-mode cluster \
  --class fr.labri.lsd.solr.indexation.java.SolrIndexer \
  --jars "$SPARK_ADDITIONAL_JARS" \
  --properties-file "$SPARK_PROPERTY_FILE" \
  --num-executors 2 \
  --executor-cores 2 \
  ~/java-solr-indexation-1.0-jar-with-dependencies.jar \
  --input CanopyData/loc-gowalla_totalCheckins.txt \
  --collectionName flalanne_gowalla \
  --batchSize 10000 \
  --zkHost "$ZK_HOST" \
  --create 6 2 2
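The source of the SolrIndexer main class is not reproduced on this page. The following hypothetical IndexerArgs class sketches how the options passed after the jar could be read. It assumes that the three values following --create are numShards, numReplicas and maxShardsPerNode, in the order of createCollection's parameters, and its default batch size of 1000 is arbitrary; the actual project may parse its arguments differently.

```java
/**
 * Hypothetical parser for the SolrIndexer options used in the start script.
 * Assumption: "--create a b c" means numShards, numReplicas, maxShardsPerNode.
 */
final class IndexerArgs {
    String input;
    String collectionName;
    String zkHost;
    int batchSize = 1000;  // arbitrary illustrative default
    int[] create;          // null when --create is absent

    static IndexerArgs parse(String[] args) {
        IndexerArgs a = new IndexerArgs();
        for (int i = 0; i < args.length; i++) {
            switch (args[i]) {
                case "--input":
                    a.input = value(args, ++i);
                    break;
                case "--collectionName":
                    a.collectionName = value(args, ++i);
                    break;
                case "--batchSize":
                    a.batchSize = Integer.parseInt(value(args, ++i));
                    break;
                case "--zkHost":
                    a.zkHost = value(args, ++i);
                    break;
                case "--create": {
                    int[] c = new int[3];
                    for (int k = 0; k < 3; k++) {
                        c[k] = Integer.parseInt(value(args, ++i));
                    }
                    a.create = c;
                    break;
                }
                default:
                    throw new IllegalArgumentException("unknown option: " + args[i]);
            }
        }
        if (a.input == null || a.collectionName == null || a.zkHost == null) {
            throw new IllegalArgumentException("--input, --collectionName and --zkHost are required");
        }
        return a;
    }

    /** Returns args[i], failing clearly when an option is missing its value. */
    private static String value(String[] args, int i) {
        if (i >= args.length) {
            throw new IllegalArgumentException("missing option value");
        }
        return args[i];
    }
}
```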

Default schema

By default, new collections are created with a schema identical to the one reproduced below. It contains useful, preconfigured field types for the data types implemented in Solr (see the fieldType definitions), which can be used to configure an index's fields.
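Dynamic fields select a field type by naming convention: a field called date_dt, for instance, matches the *_dt pattern declared in the schema below and is therefore typed pdate. The following hypothetical DynamicFields class resolves a name against a subset of those patterns. It assumes Solr's documented precedence rule, where the longest matching pattern wins and, between patterns of equal length, the one declared first is used.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical resolver for a subset of the dynamicField patterns of the default schema. */
final class DynamicFields {
    // pattern -> fieldType, copied from dynamicField declarations (declaration order kept)
    private static final Map<String, String> PATTERNS = new LinkedHashMap<>();

    static {
        PATTERNS.put("*_i", "pint");
        PATTERNS.put("*_s", "string");
        PATTERNS.put("*_l", "plong");
        PATTERNS.put("*_t", "text_general");
        PATTERNS.put("random_*", "random");
        PATTERNS.put("*_dt", "pdate");
        PATTERNS.put("*_p", "location");
        PATTERNS.put("attr_*", "text_general");
        PATTERNS.put("*_txt_en", "text_en");
    }

    private DynamicFields() {}

    /** A "*" may appear only at the start or at the end of a pattern. */
    static boolean matches(String pattern, String fieldName) {
        if (pattern.startsWith("*")) {
            return fieldName.endsWith(pattern.substring(1));
        }
        if (pattern.endsWith("*")) {
            return fieldName.startsWith(pattern.substring(0, pattern.length() - 1));
        }
        return pattern.equals(fieldName);
    }

    /** fieldType of the longest matching pattern; ties keep the earliest declared one. */
    static Optional<String> typeOf(String fieldName) {
        String best = null;
        for (String pattern : PATTERNS.keySet()) {
            if (matches(pattern, fieldName) && (best == null || pattern.length() > best.length())) {
                best = pattern;
            }
        }
        return Optional.ofNullable(best).map(PATTERNS::get);
    }
}
```

With these conventions, the Gowalla data could for instance be indexed into fields named user_s, loc_p and date_dt without declaring them explicitly, as an alternative to gowallaFields().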

<?xml version="1.0" encoding="UTF-8" ?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!--

This example schema is the recommended starting point for users.

It should be kept correct and concise, usable out-of-the-box.

For more information, on how to customize this file, please see

http://lucene.apache.org/solr/guide/documents-fields-and-schema-design.html

PERFORMANCE NOTE: this schema includes many optional features and should not

be used for benchmarking. To improve performance one could

- set stored="false" for all fields possible (esp large fields) when you

only need to search on the field but don't need to return the original

value.

- set indexed="false" if you don't need to search on the field, but only

return the field as a result of searching on other indexed fields.

- remove all unneeded copyField statements

- for best index size and searching performance, set "index" to false

for all general text fields, use copyField to copy them to the

catchall "text" field, and use that for searching.

-->

<schema name="default-config" version="1.6">

<!-- attribute "name" is the name of this schema and is only used for display purposes.

version="x.y" is Solr's version number for the schema syntax and

semantics. It should not normally be changed by applications.

1.0: multiValued attribute did not exist, all fields are multiValued

by nature

1.1: multiValued attribute introduced, false by default

1.2: omitTermFreqAndPositions attribute introduced, true by default

except for text fields.

1.3: removed optional field compress feature

1.4: autoGeneratePhraseQueries attribute introduced to drive QueryParser

behavior when a single string produces multiple tokens. Defaults

to off for version >= 1.4

1.5: omitNorms defaults to true for primitive field types

(int, float, boolean, string...)

1.6: useDocValuesAsStored defaults to true.

-->

<!-- Valid attributes for fields:

name: mandatory - the name for the field

type: mandatory - the name of a field type from the

fieldTypes section

indexed: true if this field should be indexed (searchable or sortable)

stored: true if this field should be retrievable

docValues: true if this field should have doc values. Doc Values is

recommended (required, if you are using *Point fields) for faceting,

grouping, sorting and function queries. Doc Values will make the index

faster to load, more NRT-friendly and more memory-efficient.

They are currently only supported by StrField, UUIDField, all

*PointFields, and depending on the field type, they might require

the field to be single-valued, be required or have a default value

(check the documentation of the field type you're interested in for

more information)

multiValued: true if this field may contain multiple values per document

omitNorms: (expert) set to true to omit the norms associated with

this field (this disables length normalization and index-time

boosting for the field, and saves some memory). Only full-text

fields or fields that need an index-time boost need norms.

Norms are omitted for primitive (non-analyzed) types by default.

termVectors: [false] set to true to store the term vector for a

given field.

When using MoreLikeThis, fields used for similarity should be

stored for best performance.

termPositions: Store position information with the term vector.

This will increase storage costs.

termOffsets: Store offset information with the term vector. This

will increase storage costs.

required: The field is required. It will throw an error if the

value does not exist

default: a value that should be used if no value is specified

when adding a document.

-->

<!-- field names should consist of alphanumeric or underscore characters only and

not start with a digit. This is not currently strictly enforced,

but other field names will not have first class support from all components

and back compatibility is not guaranteed. Names with both leading and

trailing underscores (e.g. _version_) are reserved.

-->

<!-- In this _default configset, only four fields are pre-declared:

id, _version_, _text_ and _root_. All other fields will be type guessed and added via the

"add-unknown-fields-to-the-schema" update request processor chain declared in solrconfig.xml.

Note that many dynamic fields are also defined - you can use them to specify a

field's type via field naming conventions - see below.

WARNING: The _text_ catch-all field will significantly increase your index size.

If you don't need it, consider removing it and the corresponding copyField directive.

-->

<field name="id" type="string" indexed="true" stored="true" required="true" multiValued="false" />

<!-- docValues are enabled by default for long type so we don't need to index the version field -->

<field name="_version_" type="plong" indexed="false" stored="false"/>

<field name="_root_" type="string" indexed="true" stored="false" docValues="false" />

<field name="_text_" type="text_general" indexed="true" stored="false" multiValued="true"/>

<!-- This can be enabled, in case the client does not know what fields may be searched. It isn't enabled by default

because it's very expensive to index everything twice. -->

<!-- <copyField source="*" dest="_text_"/> -->

<!-- Dynamic field definitions allow using convention over configuration

for fields via the specification of patterns to match field names.

EXAMPLE: name="*_i" will match any field ending in _i (like myid_i, z_i)

RESTRICTION: the glob-like pattern in the name attribute must have a "*" only at the start or the end. -->

<dynamicField name="*_i" type="pint" indexed="true" stored="true"/>

<dynamicField name="*_is" type="pints" indexed="true" stored="true"/>

<dynamicField name="*_s" type="string" indexed="true" stored="true" />

<dynamicField name="*_ss" type="strings" indexed="true" stored="true"/>

<dynamicField name="*_l" type="plong" indexed="true" stored="true"/>

<dynamicField name="*_ls" type="plongs" indexed="true" stored="true"/>

<dynamicField name="*_t" type="text_general" indexed="true" stored="true" multiValued="false"/>

<dynamicField name="*_txt" type="text_general" indexed="true" stored="true"/>

<dynamicField name="*_b" type="boolean" indexed="true" stored="true"/>

<dynamicField name="*_bs" type="booleans" indexed="true" stored="true"/>

<dynamicField name="*_f" type="pfloat" indexed="true" stored="true"/>

<dynamicField name="*_fs" type="pfloats" indexed="true" stored="true"/>

<dynamicField name="*_d" type="pdouble" indexed="true" stored="true"/>

<dynamicField name="*_ds" type="pdoubles" indexed="true" stored="true"/>

<dynamicField name="random_*" type="random"/>

<!-- Type used for data-driven schema, to add a string copy for each text field -->

<dynamicField name="*_str" type="strings" stored="false" docValues="true" indexed="false" />

<dynamicField name="*_dt" type="pdate" indexed="true" stored="true"/>

<dynamicField name="*_dts" type="pdate" indexed="true" stored="true" multiValued="true"/>

<dynamicField name="*_p" type="location" indexed="true" stored="true"/>

<dynamicField name="*_srpt" type="location_rpt" indexed="true" stored="true"/>

<!-- payloaded dynamic fields -->

<dynamicField name="*_dpf" type="delimited_payloads_float" indexed="true" stored="true"/>

<dynamicField name="*_dpi" type="delimited_payloads_int" indexed="true" stored="true"/>

<dynamicField name="*_dps" type="delimited_payloads_string" indexed="true" stored="true"/>

<dynamicField name="attr_*" type="text_general" indexed="true" stored="true" multiValued="true"/>

<!-- Field to use to determine and enforce document uniqueness.

Unless this field is marked with required="false", it will be a required field

-->

<uniqueKey>id</uniqueKey>

<!-- copyField commands copy one field to another at the time a document

is added to the index. It's used either to index the same field differently,

or to add multiple fields to the same field for easier/faster searching.

<copyField source="sourceFieldName" dest="destinationFieldName"/>

-->

<!-- field type definitions. The "name" attribute is

just a label to be used by field definitions. The "class"

attribute and any other attributes determine the real

behavior of the fieldType.

Class names starting with "solr" refer to java classes in a

standard package such as org.apache.solr.analysis

-->

<!-- sortMissingLast and sortMissingFirst are optional attributes that are

currently supported on types that are sorted internally as strings

and on numeric types.

This includes "string", "boolean", "pint", "pfloat", "plong", "pdate", "pdouble".

- If sortMissingLast="true", then a sort on this field will cause documents

without the field to come after documents with the field,

regardless of the requested sort order (asc or desc).

- If sortMissingFirst="true", then a sort on this field will cause documents

without the field to come before documents with the field,

regardless of the requested sort order.

- If sortMissingLast="false" and sortMissingFirst="false" (the default),

then default lucene sorting will be used which places docs without the

field first in an ascending sort and last in a descending sort.

-->

<!-- The StrField type is not analyzed, but indexed/stored verbatim. -->

<fieldType name="string" class="solr.StrField" sortMissingLast="true" docValues="true" />

<fieldType name="strings" class="solr.StrField" sortMissingLast="true" multiValued="true" docValues="true" />

<!-- boolean type: "true" or "false" -->

<fieldType name="boolean" class="solr.BoolField" sortMissingLast="true"/>

<fieldType name="booleans" class="solr.BoolField" sortMissingLast="true" multiValued="true"/>

<!--

Numeric field types that index values using KD-trees.

Point fields don't support FieldCache, so they must have docValues="true" if needed for sorting, faceting, functions, etc.

-->

<fieldType name="pint" class="solr.IntPointField" docValues="true"/>

<fieldType name="pfloat" class="solr.FloatPointField" docValues="true"/>

<fieldType name="plong" class="solr.LongPointField" docValues="true"/>

<fieldType name="pdouble" class="solr.DoublePointField" docValues="true"/>

<fieldType name="pints" class="solr.IntPointField" docValues="true" multiValued="true"/>

<fieldType name="pfloats" class="solr.FloatPointField" docValues="true" multiValued="true"/>

<fieldType name="plongs" class="solr.LongPointField" docValues="true" multiValued="true"/>

<fieldType name="pdoubles" class="solr.DoublePointField" docValues="true" multiValued="true"/>

<fieldType name="random" class="solr.RandomSortField" indexed="true"/>

<!-- The format for this date field is of the form 1995-12-31T23:59:59Z, and

is a more restricted form of the canonical representation of dateTime

http://www.w3.org/TR/xmlschema-2/#dateTime

The trailing "Z" designates UTC time and is mandatory.

Optional fractional seconds are allowed: 1995-12-31T23:59:59.999Z

All other components are mandatory.

Expressions can also be used to denote calculations that should be

performed relative to "NOW" to determine the value, ie...

NOW/HOUR

... Round to the start of the current hour

NOW-1DAY

... Exactly 1 day prior to now

NOW/DAY+6MONTHS+3DAYS

... 6 months and 3 days in the future from the start of

the current day

-->

<!-- KD-tree versions of date fields -->

<fieldType name="pdate" class="solr.DatePointField" docValues="true"/>

<fieldType name="pdates" class="solr.DatePointField" docValues="true" multiValued="true"/>

<!--Binary data type. The data should be sent/retrieved in as Base64 encoded Strings -->

<fieldType name="binary" class="solr.BinaryField"/>

<!-- solr.TextField allows the specification of custom text analyzers

specified as a tokenizer and a list of token filters. Different

analyzers may be specified for indexing and querying.

The optional positionIncrementGap puts space between multiple fields of

this type on the same document, with the purpose of preventing false phrase

matching across fields.

For more info on customizing your analyzer chain, please see

http://lucene.apache.org/solr/guide/understanding-analyzers-tokenizers-and-filters.html#understanding-analyzers-tokenizers-and-filters

-->

<!-- One can also specify an existing Analyzer class that has a

default constructor via the class attribute on the analyzer element.

Example:

<fieldType name="text_greek" class="solr.TextField">

<analyzer class="org.apache.lucene.analysis.el.GreekAnalyzer"/>

</fieldType>

-->

<!-- A text field that only splits on whitespace for exact matching of words -->

<dynamicField name="*_ws" type="text_ws" indexed="true" stored="true"/>

<fieldType name="text_ws" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

</analyzer>

</fieldType>

<!-- A general text field that has reasonable, generic

cross-language defaults: it tokenizes with StandardTokenizer,

removes stop words from case-insensitive "stopwords.txt"

(empty by default), and down cases. At query time only, it

also applies synonyms.

-->

<fieldType name="text_general" class="solr.TextField" positionIncrementGap="100" multiValued="true">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<!-- in this example, we will only use synonyms at query time

<filter class="solr.SynonymGraphFilterFactory" synonyms="index_synonyms.txt" ignoreCase="true" expand="false"/>

<filter class="solr.FlattenGraphFilterFactory"/>

-->

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

<!-- SortableTextField generally functions exactly like TextField,

except that it supports, and by default uses, docValues for sorting (or faceting)

on the first 1024 characters of the original field values (which is configurable).

This makes it a bit more useful than TextField in many situations, but the trade-off

is that it takes up more space on disk; which is why it's not used in place of TextField

for every fieldType in this _default schema.

-->

<dynamicField name="*_t_sort" type="text_gen_sort" indexed="true" stored="true" multiValued="false"/>

<dynamicField name="*_txt_sort" type="text_gen_sort" indexed="true" stored="true"/>

<fieldType name="text_gen_sort" class="solr.SortableTextField" positionIncrementGap="100" multiValued="true">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

<!-- A text field with defaults appropriate for English: it tokenizes with StandardTokenizer,

removes English stop words (lang/stopwords_en.txt), down cases, protects words from protwords.txt, and

finally applies Porter's stemming. The query time analyzer also applies synonyms from synonyms.txt. -->

<dynamicField name="*_txt_en" type="text_en" indexed="true" stored="true"/>

<fieldType name="text_en" class="solr.TextField" positionIncrementGap="100">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- in this example, we will only use synonyms at query time

<filter class="solr.SynonymGraphFilterFactory" synonyms="index_synonyms.txt" ignoreCase="true" expand="false"/>

<filter class="solr.FlattenGraphFilterFactory"/>

-->

<!-- Case insensitive stop word removal.

-->

<filter class="solr.StopFilterFactory"

ignoreCase="true"

words="lang/stopwords_en.txt"

/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.EnglishPossessiveFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<!-- Optionally you may want to use this less aggressive stemmer instead of PorterStemFilterFactory:

<filter class="solr.EnglishMinimalStemFilterFactory"/>

-->

<filter class="solr.PorterStemFilterFactory"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.StopFilterFactory"

ignoreCase="true"

words="lang/stopwords_en.txt"

/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.EnglishPossessiveFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<!-- Optionally you may want to use this less aggressive stemmer instead of PorterStemFilterFactory:

<filter class="solr.EnglishMinimalStemFilterFactory"/>

-->

<filter class="solr.PorterStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- A text field with defaults appropriate for English, plus

aggressive word-splitting and autophrase features enabled.

This field is just like text_en, except it adds

WordDelimiterGraphFilter to enable splitting and matching of

words on case-change, alpha numeric boundaries, and

non-alphanumeric chars. This means certain compound word

cases will work, for example query "wi fi" will match

document "WiFi" or "wi-fi".

-->

<dynamicField name="*_txt_en_split" type="text_en_splitting" indexed="true" stored="true"/>

<fieldType name="text_en_splitting" class="solr.TextField" positionIncrementGap="100" autoGeneratePhraseQueries="true">

<analyzer type="index">

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<!-- in this example, we will only use synonyms at query time

<filter class="solr.SynonymGraphFilterFactory" synonyms="index_synonyms.txt" ignoreCase="true" expand="false"/>

-->

<!-- Case insensitive stop word removal.

-->

<filter class="solr.StopFilterFactory"

ignoreCase="true"

words="lang/stopwords_en.txt"

/>

<filter class="solr.WordDelimiterGraphFilterFactory" generateWordParts="1" generateNumberParts="1" catenateWords="1" catenateNumbers="1" catenateAll="0" splitOnCaseChange="1"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<filter class="solr.PorterStemFilterFactory"/>

<filter class="solr.FlattenGraphFilterFactory" />

</analyzer>

<analyzer type="query">

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.StopFilterFactory"

ignoreCase="true"

words="lang/stopwords_en.txt"

/>

<filter class="solr.WordDelimiterGraphFilterFactory" generateWordParts="1" generateNumberParts="1" catenateWords="0" catenateNumbers="0" catenateAll="0" splitOnCaseChange="1"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<filter class="solr.PorterStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Less flexible matching, but fewer false matches. Probably not ideal for product names,

but may be good for SKUs. Can insert dashes in the wrong place and still match. -->

<dynamicField name="*_txt_en_split_tight" type="text_en_splitting_tight" indexed="true" stored="true"/>

<fieldType name="text_en_splitting_tight" class="solr.TextField" positionIncrementGap="100" autoGeneratePhraseQueries="true">

<analyzer type="index">

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="false"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_en.txt"/>

<filter class="solr.WordDelimiterGraphFilterFactory" generateWordParts="0" generateNumberParts="0" catenateWords="1" catenateNumbers="1" catenateAll="0"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<filter class="solr.EnglishMinimalStemFilterFactory"/>

<!-- this filter can remove any duplicate tokens that appear at the same position - sometimes

possible with WordDelimiterGraphFilter in conjunction with stemming. -->

<filter class="solr.RemoveDuplicatesTokenFilterFactory"/>

<filter class="solr.FlattenGraphFilterFactory" />

</analyzer>

<analyzer type="query">

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="false"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_en.txt"/>

<filter class="solr.WordDelimiterGraphFilterFactory" generateWordParts="0" generateNumberParts="0" catenateWords="1" catenateNumbers="1" catenateAll="0"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.KeywordMarkerFilterFactory" protected="protwords.txt"/>

<filter class="solr.EnglishMinimalStemFilterFactory"/>

<!-- this filter can remove any duplicate tokens that appear at the same position - sometimes

possible with WordDelimiterGraphFilter in conjunction with stemming. -->

<filter class="solr.RemoveDuplicatesTokenFilterFactory"/>

</analyzer>

</fieldType>

<!-- Just like text_general except it reverses the characters of

each token, to enable more efficient leading wildcard queries.

-->

<dynamicField name="*_txt_rev" type="text_general_rev" indexed="true" stored="true"/>

<fieldType name="text_general_rev" class="solr.TextField" positionIncrementGap="100">

<analyzer type="index">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.ReversedWildcardFilterFactory" withOriginal="true"

maxPosAsterisk="3" maxPosQuestion="2" maxFractionAsterisk="0.33"/>

</analyzer>

<analyzer type="query">

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.SynonymGraphFilterFactory" synonyms="synonyms.txt" ignoreCase="true" expand="true"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="stopwords.txt" />

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

<dynamicField name="*_phon_en" type="phonetic_en" indexed="true" stored="true"/>

<fieldType name="phonetic_en" stored="false" indexed="true" class="solr.TextField" >

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.DoubleMetaphoneFilterFactory" inject="false"/>

</analyzer>

</fieldType>

<!-- lowercases the entire field value, keeping it as a single token. -->

<dynamicField name="*_s_lower" type="lowercase" indexed="true" stored="true"/>

<fieldType name="lowercase" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.KeywordTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory" />

</analyzer>

</fieldType>

<!--

Example of using PathHierarchyTokenizerFactory at index time, so

queries for paths match documents at that path, or in descendent paths

-->

<dynamicField name="*_descendent_path" type="descendent_path" indexed="true" stored="true"/>

<fieldType name="descendent_path" class="solr.TextField">

<analyzer type="index">

<tokenizer class="solr.PathHierarchyTokenizerFactory" delimiter="/" />

</analyzer>

<analyzer type="query">

<tokenizer class="solr.KeywordTokenizerFactory" />

</analyzer>

</fieldType>

<!--

Example of using PathHierarchyTokenizerFactory at query time, so

queries for paths match documents at that path, or in ancestor paths

-->

<dynamicField name="*_ancestor_path" type="ancestor_path" indexed="true" stored="true"/>

<fieldType name="ancestor_path" class="solr.TextField">

<analyzer type="index">

<tokenizer class="solr.KeywordTokenizerFactory" />

</analyzer>

<analyzer type="query">

<tokenizer class="solr.PathHierarchyTokenizerFactory" delimiter="/" />

</analyzer>

</fieldType>

<!-- This point type indexes the coordinates as separate fields (subFields)

If subFieldType is defined, it references a type, and a dynamic field

definition is created matching *___<typename>. Alternately, if

subFieldSuffix is defined, that is used to create the subFields.

Example: if subFieldType="double", then the coordinates would be

indexed in fields myloc_0___double,myloc_1___double.

Example: if subFieldSuffix="_d" then the coordinates would be indexed

in fields myloc_0_d,myloc_1_d

The subFields are an implementation detail of the fieldType, and end

users normally should not need to know about them.

-->

<dynamicField name="*_point" type="point" indexed="true" stored="true"/>

<fieldType name="point" class="solr.PointType" dimension="2" subFieldSuffix="_d"/>

<!-- A specialized field for geospatial search filters and distance sorting. -->

<fieldType name="location" class="solr.LatLonPointSpatialField" docValues="true"/>

<!-- A geospatial field type that supports multiValued and polygon shapes.

For more information about this and other spatial fields see:

http://lucene.apache.org/solr/guide/spatial-search.html

-->

<fieldType name="location_rpt" class="solr.SpatialRecursivePrefixTreeFieldType"

geo="true" distErrPct="0.025" maxDistErr="0.001" distanceUnits="kilometers" />

<!-- Payloaded field types -->

<fieldType name="delimited_payloads_float" stored="false" indexed="true" class="solr.TextField">

<analyzer>

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.DelimitedPayloadTokenFilterFactory" encoder="float"/>

</analyzer>

</fieldType>

<fieldType name="delimited_payloads_int" stored="false" indexed="true" class="solr.TextField">

<analyzer>

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.DelimitedPayloadTokenFilterFactory" encoder="integer"/>

</analyzer>

</fieldType>

<fieldType name="delimited_payloads_string" stored="false" indexed="true" class="solr.TextField">

<analyzer>

<tokenizer class="solr.WhitespaceTokenizerFactory"/>

<filter class="solr.DelimitedPayloadTokenFilterFactory" encoder="identity"/>

</analyzer>

</fieldType>

<!-- some examples for different languages (generally ordered by ISO code) -->

<!-- Arabic -->

<dynamicField name="*_txt_ar" type="text_ar" indexed="true" stored="true"/>

<fieldType name="text_ar" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- for any non-arabic -->

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ar.txt" />

<!-- normalizes ﻯ to ﻱ, etc -->

<filter class="solr.ArabicNormalizationFilterFactory"/>

<filter class="solr.ArabicStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Bulgarian -->

<dynamicField name="*_txt_bg" type="text_bg" indexed="true" stored="true"/>

<fieldType name="text_bg" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_bg.txt" />

<filter class="solr.BulgarianStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Catalan -->

<dynamicField name="*_txt_ca" type="text_ca" indexed="true" stored="true"/>

<fieldType name="text_ca" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- removes l', etc -->

<filter class="solr.ElisionFilterFactory" ignoreCase="true" articles="lang/contractions_ca.txt"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ca.txt" />

<filter class="solr.SnowballPorterFilterFactory" language="Catalan"/>

</analyzer>

</fieldType>

<!-- CJK bigram (see text_ja for a Japanese configuration using morphological analysis) -->

<dynamicField name="*_txt_cjk" type="text_cjk" indexed="true" stored="true"/>

<fieldType name="text_cjk" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- normalize width before bigram, as e.g. half-width dakuten combine -->

<filter class="solr.CJKWidthFilterFactory"/>

<!-- for any non-CJK -->

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.CJKBigramFilterFactory"/>

</analyzer>

</fieldType>

<!-- Czech -->

<dynamicField name="*_txt_cz" type="text_cz" indexed="true" stored="true"/>

<fieldType name="text_cz" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_cz.txt" />

<filter class="solr.CzechStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Danish -->

<dynamicField name="*_txt_da" type="text_da" indexed="true" stored="true"/>

<fieldType name="text_da" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_da.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Danish"/>

</analyzer>

</fieldType>

<!-- German -->

<dynamicField name="*_txt_de" type="text_de" indexed="true" stored="true"/>

<fieldType name="text_de" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_de.txt" format="snowball" />

<filter class="solr.GermanNormalizationFilterFactory"/>

<filter class="solr.GermanLightStemFilterFactory"/>

<!-- less aggressive: <filter class="solr.GermanMinimalStemFilterFactory"/> -->

<!-- more aggressive: <filter class="solr.SnowballPorterFilterFactory" language="German2"/> -->

</analyzer>

</fieldType>

<!-- Greek -->

<dynamicField name="*_txt_el" type="text_el" indexed="true" stored="true"/>

<fieldType name="text_el" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- greek specific lowercase for sigma -->

<filter class="solr.GreekLowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="false" words="lang/stopwords_el.txt" />

<filter class="solr.GreekStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Spanish -->

<dynamicField name="*_txt_es" type="text_es" indexed="true" stored="true"/>

<fieldType name="text_es" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_es.txt" format="snowball" />

<filter class="solr.SpanishLightStemFilterFactory"/>

<!-- more aggressive: <filter class="solr.SnowballPorterFilterFactory" language="Spanish"/> -->

</analyzer>

</fieldType>

<!-- Basque -->

<dynamicField name="*_txt_eu" type="text_eu" indexed="true" stored="true"/>

<fieldType name="text_eu" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_eu.txt" />

<filter class="solr.SnowballPorterFilterFactory" language="Basque"/>

</analyzer>

</fieldType>

<!-- Persian -->

<dynamicField name="*_txt_fa" type="text_fa" indexed="true" stored="true"/>

<fieldType name="text_fa" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<!-- for ZWNJ -->

<charFilter class="solr.PersianCharFilterFactory"/>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.ArabicNormalizationFilterFactory"/>

<filter class="solr.PersianNormalizationFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_fa.txt" />

</analyzer>

</fieldType>

<!-- Finnish -->

<dynamicField name="*_txt_fi" type="text_fi" indexed="true" stored="true"/>

<fieldType name="text_fi" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_fi.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Finnish"/>

<!-- less aggressive: <filter class="solr.FinnishLightStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- French -->

<dynamicField name="*_txt_fr" type="text_fr" indexed="true" stored="true"/>

<fieldType name="text_fr" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- removes l', etc -->

<filter class="solr.ElisionFilterFactory" ignoreCase="true" articles="lang/contractions_fr.txt"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_fr.txt" format="snowball" />

<filter class="solr.FrenchLightStemFilterFactory"/>

<!-- less aggressive: <filter class="solr.FrenchMinimalStemFilterFactory"/> -->

<!-- more aggressive: <filter class="solr.SnowballPorterFilterFactory" language="French"/> -->

</analyzer>

</fieldType>

<!-- Irish -->

<dynamicField name="*_txt_ga" type="text_ga" indexed="true" stored="true"/>

<fieldType name="text_ga" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- removes d', etc -->

<filter class="solr.ElisionFilterFactory" ignoreCase="true" articles="lang/contractions_ga.txt"/>

<!-- removes n-, etc. position increments is intentionally false! -->

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/hyphenations_ga.txt"/>

<filter class="solr.IrishLowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ga.txt"/>

<filter class="solr.SnowballPorterFilterFactory" language="Irish"/>

</analyzer>

</fieldType>

<!-- Galician -->

<dynamicField name="*_txt_gl" type="text_gl" indexed="true" stored="true"/>

<fieldType name="text_gl" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_gl.txt" />

<filter class="solr.GalicianStemFilterFactory"/>

<!-- less aggressive: <filter class="solr.GalicianMinimalStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Hindi -->

<dynamicField name="*_txt_hi" type="text_hi" indexed="true" stored="true"/>

<fieldType name="text_hi" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<!-- normalizes unicode representation -->

<filter class="solr.IndicNormalizationFilterFactory"/>

<!-- normalizes variation in spelling -->

<filter class="solr.HindiNormalizationFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_hi.txt" />

<filter class="solr.HindiStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Hungarian -->

<dynamicField name="*_txt_hu" type="text_hu" indexed="true" stored="true"/>

<fieldType name="text_hu" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_hu.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Hungarian"/>

<!-- less aggressive: <filter class="solr.HungarianLightStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Armenian -->

<dynamicField name="*_txt_hy" type="text_hy" indexed="true" stored="true"/>

<fieldType name="text_hy" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_hy.txt" />

<filter class="solr.SnowballPorterFilterFactory" language="Armenian"/>

</analyzer>

</fieldType>

<!-- Indonesian -->

<dynamicField name="*_txt_id" type="text_id" indexed="true" stored="true"/>

<fieldType name="text_id" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_id.txt" />

<!-- for a less aggressive approach (only inflectional suffixes), set stemDerivational to false -->

<filter class="solr.IndonesianStemFilterFactory" stemDerivational="true"/>

</analyzer>

</fieldType>

<!-- Italian -->

<dynamicField name="*_txt_it" type="text_it" indexed="true" stored="true"/>

<fieldType name="text_it" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<!-- removes l', etc -->

<filter class="solr.ElisionFilterFactory" ignoreCase="true" articles="lang/contractions_it.txt"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_it.txt" format="snowball" />

<filter class="solr.ItalianLightStemFilterFactory"/>

<!-- more aggressive: <filter class="solr.SnowballPorterFilterFactory" language="Italian"/> -->

</analyzer>

</fieldType>

<!-- Japanese using morphological analysis (see text_cjk for a configuration using bigramming)

NOTE: If you want to optimize search for precision, use default operator AND in your request

handler config (q.op). Use OR if you would like to optimize for recall (default).

-->

<dynamicField name="*_txt_ja" type="text_ja" indexed="true" stored="true"/>

<fieldType name="text_ja" class="solr.TextField" positionIncrementGap="100" autoGeneratePhraseQueries="false">

<analyzer>

<!-- Kuromoji Japanese morphological analyzer/tokenizer (JapaneseTokenizer)

Kuromoji has a search mode (default) that does segmentation useful for search. A heuristic

is used to segment compounds into their parts and the compound itself is kept as a synonym.

Valid values for attribute mode are:

normal: regular segmentation

search: segmentation useful for search with synonyms compounds (default)

extended: same as search mode, but unigrams unknown words (experimental)

For some applications it might be good to use search mode for indexing and normal mode for

queries to reduce recall and prevent parts of compounds from being matched and highlighted.

Use <analyzer type="index"> and <analyzer type="query"> for this and mode normal in query.

Kuromoji also has a convenient user dictionary feature that allows overriding the statistical

model with your own entries for segmentation, part-of-speech tags and readings without a need

to specify weights. Notice that user dictionaries have not been subject to extensive testing.

User dictionary attributes are:

userDictionary: user dictionary filename

userDictionaryEncoding: user dictionary encoding (default is UTF-8)

See lang/userdict_ja.txt for a sample user dictionary file.

Punctuation characters are discarded by default. Use discardPunctuation="false" to keep them.

-->

<tokenizer class="solr.JapaneseTokenizerFactory" mode="search"/>

<!--<tokenizer class="solr.JapaneseTokenizerFactory" mode="search" userDictionary="lang/userdict_ja.txt"/>-->

<!-- Reduces inflected verbs and adjectives to their base/dictionary forms (辞書形) -->

<filter class="solr.JapaneseBaseFormFilterFactory"/>

<!-- Removes tokens with certain part-of-speech tags -->

<filter class="solr.JapanesePartOfSpeechStopFilterFactory" tags="lang/stoptags_ja.txt" />

<!-- Normalizes full-width romaji to half-width and half-width kana to full-width (Unicode NFKC subset) -->

<filter class="solr.CJKWidthFilterFactory"/>

<!-- Removes common tokens that are typically not useful for search but have a negative effect on ranking -->

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ja.txt" />

<!-- Normalizes common katakana spelling variations by removing any last long sound character (U+30FC) -->

<filter class="solr.JapaneseKatakanaStemFilterFactory" minimumLength="4"/>

<!-- Lower-cases romaji characters -->

<filter class="solr.LowerCaseFilterFactory"/>

</analyzer>

</fieldType>

<!-- Latvian -->

<dynamicField name="*_txt_lv" type="text_lv" indexed="true" stored="true"/>

<fieldType name="text_lv" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_lv.txt" />

<filter class="solr.LatvianStemFilterFactory"/>

</analyzer>

</fieldType>

<!-- Dutch -->

<dynamicField name="*_txt_nl" type="text_nl" indexed="true" stored="true"/>

<fieldType name="text_nl" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_nl.txt" format="snowball" />

<filter class="solr.StemmerOverrideFilterFactory" dictionary="lang/stemdict_nl.txt" ignoreCase="false"/>

<filter class="solr.SnowballPorterFilterFactory" language="Dutch"/>

</analyzer>

</fieldType>

<!-- Norwegian -->

<dynamicField name="*_txt_no" type="text_no" indexed="true" stored="true"/>

<fieldType name="text_no" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_no.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Norwegian"/>

<!-- less aggressive: <filter class="solr.NorwegianLightStemFilterFactory"/> -->

<!-- singular/plural: <filter class="solr.NorwegianMinimalStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Portuguese -->

<dynamicField name="*_txt_pt" type="text_pt" indexed="true" stored="true"/>

<fieldType name="text_pt" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_pt.txt" format="snowball" />

<filter class="solr.PortugueseLightStemFilterFactory"/>

<!-- less aggressive: <filter class="solr.PortugueseMinimalStemFilterFactory"/> -->

<!-- more aggressive: <filter class="solr.SnowballPorterFilterFactory" language="Portuguese"/> -->

<!-- most aggressive: <filter class="solr.PortugueseStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Romanian -->

<dynamicField name="*_txt_ro" type="text_ro" indexed="true" stored="true"/>

<fieldType name="text_ro" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ro.txt" />

<filter class="solr.SnowballPorterFilterFactory" language="Romanian"/>

</analyzer>

</fieldType>

<!-- Russian -->

<dynamicField name="*_txt_ru" type="text_ru" indexed="true" stored="true"/>

<fieldType name="text_ru" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ru.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Russian"/>

<!-- less aggressive: <filter class="solr.RussianLightStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Swedish -->

<dynamicField name="*_txt_sv" type="text_sv" indexed="true" stored="true"/>

<fieldType name="text_sv" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_sv.txt" format="snowball" />

<filter class="solr.SnowballPorterFilterFactory" language="Swedish"/>

<!-- less aggressive: <filter class="solr.SwedishLightStemFilterFactory"/> -->

</analyzer>

</fieldType>

<!-- Thai -->

<dynamicField name="*_txt_th" type="text_th" indexed="true" stored="true"/>

<fieldType name="text_th" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.ThaiTokenizerFactory"/>

<filter class="solr.LowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_th.txt" />

</analyzer>

</fieldType>

<!-- Turkish -->

<dynamicField name="*_txt_tr" type="text_tr" indexed="true" stored="true"/>

<fieldType name="text_tr" class="solr.TextField" positionIncrementGap="100">

<analyzer>

<tokenizer class="solr.StandardTokenizerFactory"/>

<filter class="solr.TurkishLowerCaseFilterFactory"/>

<filter class="solr.StopFilterFactory" ignoreCase="false" words="lang/stopwords_tr.txt" />

<filter class="solr.SnowballPorterFilterFactory" language="Turkish"/>

</analyzer>

</fieldType>

<!-- Similarity is the scoring routine for each document vs. a query.

A custom Similarity or SimilarityFactory may be specified here, but

the default is fine for most applications.

For more info: http://lucene.apache.org/solr/guide/other-schema-elements.html#OtherSchemaElements-Similarity

-->

<!--

<similarity class="com.example.solr.CustomSimilarityFactory">

<str name="paramkey">param value</str>

</similarity>

-->

</schema>
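The comment on `text_en_splitting` says that a query for "wi fi" matches documents containing "WiFi" or "wi-fi". The following is a simplified, plain-Java approximation, not Lucene code, of what the index-time `WordDelimiterGraphFilterFactory` settings of that type do to a single whitespace-separated token. It ignores the stop-word, stemming and graph-flattening steps as well as token positions, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Simplified sketch of the index-time analysis of text_en_splitting for one token.
// Illustration only: not Lucene's WordDelimiterGraphFilter (no positions, no
// possessive handling, no stop words or stemming).
public class WordDelimiterDemo {

    // generateWordParts="1" generateNumberParts="1" splitOnCaseChange="1":
    // split on non-alphanumeric characters, lower->upper case changes and
    // letter/digit boundaries.
    static List<String> parts(String token) {
        List<String> parts = new ArrayList<>();
        StringBuilder cur = new StringBuilder();
        for (int i = 0; i < token.length(); i++) {
            char c = token.charAt(i);
            if (!Character.isLetterOrDigit(c)) {
                flush(cur, parts); // a delimiter such as '-' ends the current part
                continue;
            }
            if (cur.length() > 0) {
                char prev = cur.charAt(cur.length() - 1);
                boolean caseChange = Character.isLowerCase(prev) && Character.isUpperCase(c);
                boolean digitChange = Character.isDigit(prev) != Character.isDigit(c);
                if (caseChange || digitChange) {
                    flush(cur, parts);
                }
            }
            cur.append(c);
        }
        flush(cur, parts);
        return parts;
    }

    private static void flush(StringBuilder cur, List<String> parts) {
        if (cur.length() > 0) {
            parts.add(cur.toString());
            cur.setLength(0);
        }
    }

    private static boolean isNumber(String part) {
        return Character.isDigit(part.charAt(0)); // parts are all digits or all non-digits
    }

    // The parts plus (catenateWords="1" catenateNumbers="1" catenateAll="0") the
    // concatenation of every run of two or more consecutive parts of the same kind,
    // all lowercased as LowerCaseFilterFactory does afterwards.
    static List<String> indexTokens(String token) {
        List<String> parts = parts(token);
        List<String> out = new ArrayList<>();
        for (String p : parts) {
            out.add(p.toLowerCase(Locale.ROOT));
        }
        int start = 0;
        for (int i = 1; i <= parts.size(); i++) {
            boolean runEnds = i == parts.size()
                    || isNumber(parts.get(i)) != isNumber(parts.get(start));
            if (runEnds) {
                if (i - start > 1) {
                    out.add(String.join("", parts.subList(start, i)).toLowerCase(Locale.ROOT));
                }
                start = i;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(indexTokens("WiFi"));  // [wi, fi, wifi]
        System.out.println(indexTokens("wi-fi")); // [wi, fi, wifi]
        System.out.println(indexTokens("SD500")); // [sd, 500]
    }
}
```

Both spellings produce the parts `wi` and `fi`. The query-time analyzer (with `catenateWords="0"`) leaves the whitespace-separated terms `wi` and `fi` essentially unchanged apart from lowercasing, so the query can match either document.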
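The `text_general_rev` type reverses each token at index time to make leading wildcard queries more efficient. The sketch below shows why, in plain Java with hypothetical names. A sorted set of reversed terms stands in for the index's sorted term dictionary, so a query such as `*ing` becomes a prefix scan for `gni` instead of a scan over every term. This models the idea only and is not how Lucene implements `ReversedWildcardFilterFactory`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableSet;
import java.util.TreeSet;

// Plain-Java illustration of the idea behind text_general_rev. This is not
// Lucene's ReversedWildcardFilter, which also marks reversed tokens and keeps
// the originals (withOriginal="true") in the same field.
public class ReversedWildcardDemo {

    // Stands in for the index's sorted term dictionary of reversed terms.
    private final NavigableSet<String> reversedTerms = new TreeSet<>();

    static String reverse(String s) {
        return new StringBuilder(s).reverse().toString();
    }

    void index(String term) {
        reversedTerms.add(reverse(term));
    }

    // A leading-wildcard query "*suffix" becomes a prefix scan for reverse(suffix):
    // only terms sharing that prefix are visited.
    List<String> leadingWildcard(String suffix) {
        String prefix = reverse(suffix);
        List<String> hits = new ArrayList<>();
        for (String r : reversedTerms.tailSet(prefix, true)) {
            if (!r.startsWith(prefix)) {
                break; // sorted order: no later term can share the prefix
            }
            hits.add(reverse(r));
        }
        return hits;
    }

    public static void main(String[] args) {
        ReversedWildcardDemo idx = new ReversedWildcardDemo();
        for (String t : new String[] {"running", "jumping", "swim", "sing"}) {
            idx.index(t);
        }
        System.out.println(idx.leadingWildcard("ing")); // [running, jumping, sing]
    }
}
```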
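The `descendent_path` and `ancestor_path` types differ only in which side, index or query, runs `PathHierarchyTokenizerFactory`. The plain-Java sketch below uses hypothetical names and is not Lucene code. It mimics the tokenizer's output for the `/` delimiter and derives the matching rule for each type, assuming a query matches when any query token equals any indexed token.

```java
import java.util.ArrayList;
import java.util.List;

// Illustration of descendent_path vs ancestor_path matching with delimiter "/".
// hierarchyTokens() imitates PathHierarchyTokenizerFactory; the rest is a
// simplified model of term matching, not Solr code.
public class PathHierarchyDemo {

    // "/usr/local/bin" -> [/usr, /usr/local, /usr/local/bin]
    static List<String> hierarchyTokens(String path) {
        List<String> tokens = new ArrayList<>();
        for (int i = 1; i < path.length(); i++) {
            if (path.charAt(i) == '/') {
                tokens.add(path.substring(0, i));
            }
        }
        tokens.add(path);
        return tokens;
    }

    // descendent_path: hierarchy at index time, whole query kept as one token,
    // so a query for a path matches documents at that path or below it.
    static boolean descendentMatch(String docPath, String queryPath) {
        return hierarchyTokens(docPath).contains(queryPath);
    }

    // ancestor_path: whole path indexed as one token, hierarchy at query time,
    // so a query for a path matches documents at that path or above it.
    static boolean ancestorMatch(String docPath, String queryPath) {
        return hierarchyTokens(queryPath).contains(docPath);
    }

    public static void main(String[] args) {
        System.out.println(hierarchyTokens("/usr/local/bin")); // [/usr, /usr/local, /usr/local/bin]
        System.out.println(descendentMatch("/usr/local/bin", "/usr/local")); // true
        System.out.println(ancestorMatch("/usr", "/usr/local/bin"));         // true
        System.out.println(ancestorMatch("/opt", "/usr/local/bin"));         // false
    }
}
```

Only whole path segments are emitted, so in this model a query for `/usr/loc` does not match a document at `/usr/local/bin` as a descendant.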
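The comment on `solr.PointType` describes how a point value is spread over one subfield per coordinate. The schema's `point` type uses `dimension="2"` and `subFieldSuffix="_d"`, and is bound to the `*_point` dynamic field. Under that configuration, a value `x,y` in a field such as `home_point` would be indexed in `home_point_0_d` and `home_point_1_d`. The short sketch below only reproduces that naming rule; it does not talk to Solr, the helper name is hypothetical and the coordinates are made up.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Reproduces the subfield naming described for solr.PointType with subFieldSuffix:
// field "myloc" with value "x,y" -> myloc_0_d = x, myloc_1_d = y.
// Plain Java illustration, not Solr code.
public class PointSubFieldsDemo {

    static Map<String, Double> subFields(String field, String value, int dimension, String suffix) {
        String[] coords = value.split(",");
        if (coords.length != dimension) {
            throw new IllegalArgumentException(
                    "expected " + dimension + " coordinates, got " + coords.length);
        }
        Map<String, Double> out = new LinkedHashMap<>(); // keeps coordinate order
        for (int i = 0; i < dimension; i++) {
            out.put(field + "_" + i + suffix, Double.parseDouble(coords[i].trim()));
        }
        return out;
    }

    public static void main(String[] args) {
        // "home_point" matches the *_point dynamic field, whose type is "point".
        System.out.println(subFields("home_point", "44.84,-0.58", 2, "_d"));
        // {home_point_0_d=44.84, home_point_1_d=-0.58}
    }
}
```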

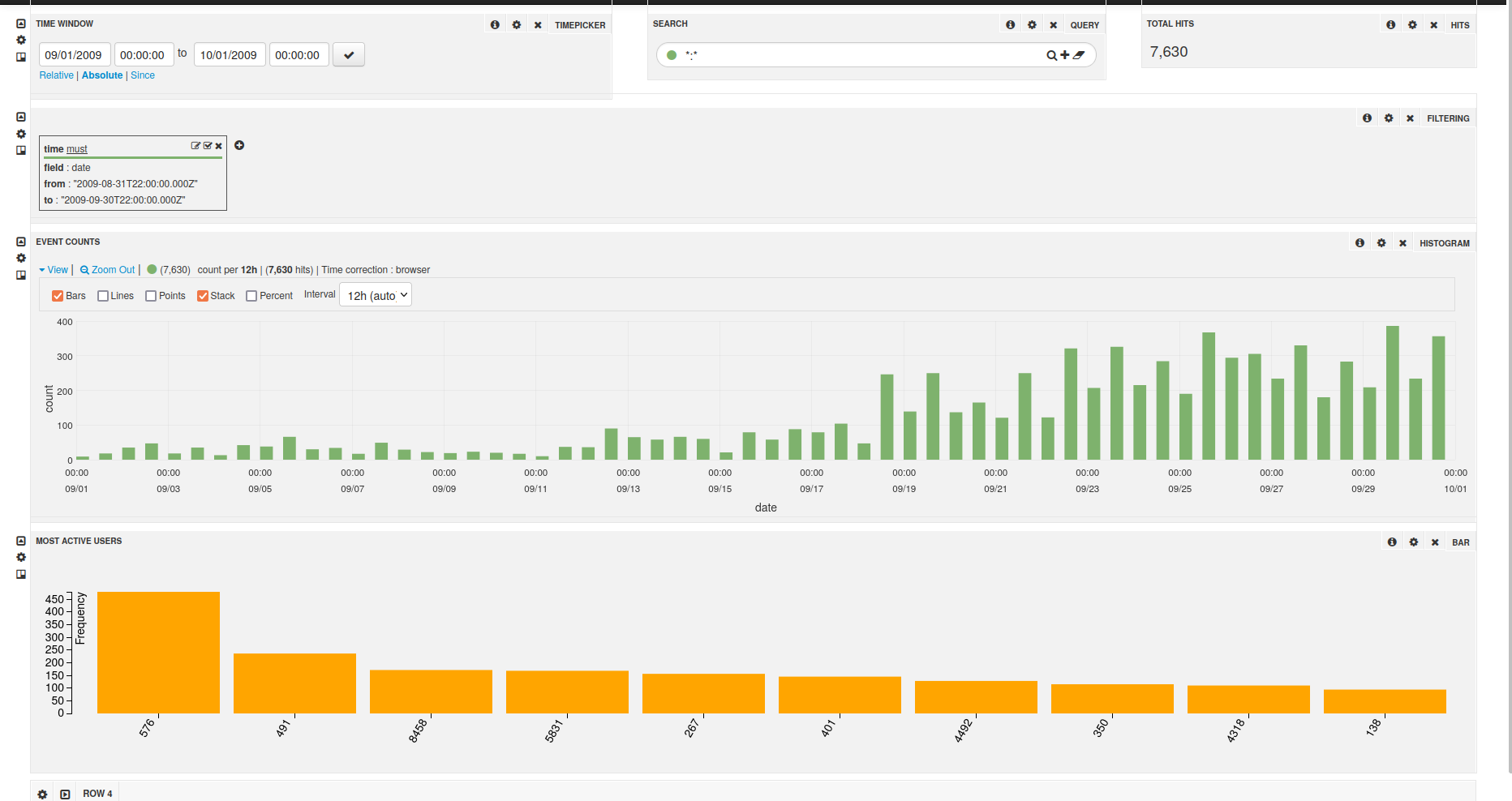

Banana

Banana (a port of Kibana) is available on the platform.

Banana provides a configurable dashboard for querying Solr indexes and visualising the results of those queries.

The following is an example of a Banana dashboard.